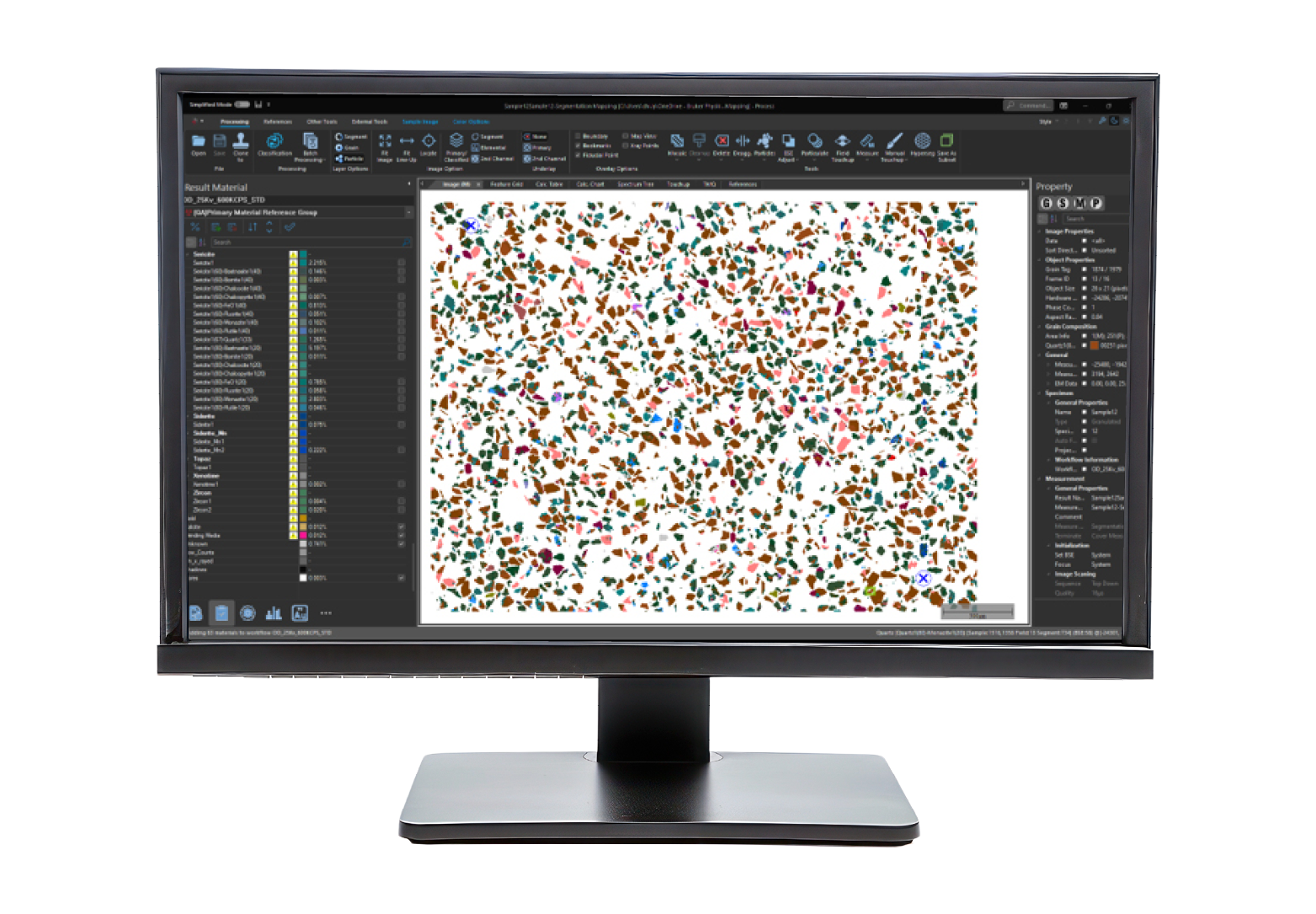

8 Practical Lessons from a Particle Analysis Webinar

Jan 29, 2026 10:09:21 AM

The most revealing part of a technical webinar often comes after the slides — in the questions. During a recent webinar on particle analysis, a series of short but thoughtful questions surfaced. Individually, they touched on size metrics, brightness and contrast, segmentation, automation, and data integration. Taken together, they paint a clear picture of what users actually need from modern particle analysis workflows.

Particle Size Metrics Are Expected, But Not the End Goal

1

Can we get D10, D50, and D90?

The answer is yes — and much more.

But what this question really signals is not a request for another number. It reflects a broader expectation: SEM-based particle analysis should integrate naturally with how materials are already described and compared.

Size distributions are a baseline requirement. The real value emerges when size can be connected to:

- Shape

- Mineralogy

- Lithotype

- Inclusion type

- Surface structure

That is when particle analysis moves from reporting numbers to understanding materials.
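As a rough illustration of connecting size to another attribute, the sketch below computes D10, D50, and D90 per particle class. It assumes each particle is already exported as a record with an equivalent diameter and a class label. The field names `cls` and `diameter_um`, the class names, and the helper functions are all hypothetical and not taken from any particular software. The D-value here is the smallest measured size at which the cumulative weight reaches the requested fraction, with no interpolation; other conventions exist.

```python
from collections import defaultdict


def d_value(diameters, fraction, weights=None):
    """Smallest diameter at which the cumulative weight reaches `fraction`.

    With weights=None every particle counts once (number-weighted).
    Pass weights such as d**3 for a volume-weighted value (assumes spheres).
    """
    if not diameters:
        raise ValueError("no particles")
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    if weights is None:
        weights = [1.0] * len(diameters)
    pairs = sorted(zip(diameters, weights))
    total = sum(w for _, w in pairs)
    # Small relative tolerance guards against floating-point round-off.
    threshold = fraction * total * (1.0 - 1e-12)
    cumulative = 0.0
    for diameter, weight in pairs:
        cumulative += weight
        if cumulative >= threshold:
            return diameter
    return pairs[-1][0]


def d_values_by_class(particles):
    """Group particles by class label and report D10/D50/D90 for each group."""
    groups = defaultdict(list)
    for p in particles:
        groups[p["cls"]].append(p["diameter_um"])
    fractions = (("D10", 0.1), ("D50", 0.5), ("D90", 0.9))
    return {
        cls: {name: d_value(ds, f) for name, f in fractions}
        for cls, ds in groups.items()
    }


# Hypothetical example data: two classes with different size ranges.
particles = (
    [{"cls": "quartz", "diameter_um": d} for d in (2.0, 4.0, 6.0, 8.0, 10.0)]
    + [{"cls": "pyrite", "diameter_um": d} for d in (1.0, 1.5, 3.0)]
)
summary = d_values_by_class(particles)
```

Whether number-weighted or volume-weighted values are the right choice depends on how the material is specified elsewhere, so the weighting is left as an explicit argument rather than a default assumption.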

Brightness, Contrast and Segmentation Are Central — Not Secondary

2

Several questions focused on brightness and contrast (B/C):

- Does segmentation depend on B/C?

- Can different B/C settings be saved per sample?

- What happens in heterogeneous samples?

- Does automatic B/C cause problems?

These are not software questions — they are data quality questions. Brightness and contrast define the grayscale dynamic range used for:

- Particle detection

- Segmentation

- X-ray point placement

- Classification thresholds

If brightness and contrast are unstable, segmentation becomes unstable.

If segmentation is unstable, statistics lose meaning.

The recurring theme was clear: reproducibility matters more than automation speed.
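To make the dependence concrete, here is a minimal sketch under a deliberately simplified model: the detector signal is mapped to 8-bit gray levels with a linear gain (contrast) and offset (brightness), and particles are detected with a fixed gray threshold. The signal values, the settings, and the function names are illustrative assumptions, not the behavior of any specific instrument.

```python
def apply_bc(signal, contrast, brightness):
    """Map raw signal values (0..1) to 8-bit gray levels, clipped to 0..255."""
    return [min(255, max(0, round(contrast * s + brightness))) for s in signal]


def segmented_fraction(gray, threshold):
    """Fraction of pixels at or above a fixed gray threshold."""
    return sum(g >= threshold for g in gray) / len(gray)


# Hypothetical sample: 60 background pixels, 25 pixels of a mid-gray phase,
# and 15 pixels of a bright phase.
signal = [0.2] * 60 + [0.5] * 25 + [0.8] * 15

threshold = 100  # the same fixed threshold is used for both acquisitions

gray_a = apply_bc(signal, contrast=250, brightness=0)  # levels 50, 125, 200
gray_b = apply_bc(signal, contrast=180, brightness=0)  # levels 36, 90, 144

fraction_a = segmented_fraction(gray_a, threshold)  # both phases detected
fraction_b = segmented_fraction(gray_b, threshold)  # mid-gray phase is lost
```

The sample is identical in both cases. Only the contrast setting changed, yet the mid-gray phase drops below the threshold in the second acquisition and disappears from the statistics.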

Channeling, Contrast and When Artifacts Become Information

3

Another subtle but important topic was channeling contrast in BSE imaging.

Channeling is often treated as a problem:

- It can affect BSE-based classification

- It can introduce contrast unrelated to chemistry

But users also recognize that:

- Channeling can help differentiate grains

- It can assist in grain size or texture analysis

This highlights a recurring truth in SEM-based particle analysis: many “artifacts” are only artifacts if you are not trying to measure them.

The key is knowing when to suppress an effect — and when to use it deliberately.

Overlapping Particles Are the Rule, Not the Exception

4

Questions about overlapping particles, inclusions within inclusions, and agglomerates came up repeatedly.

Real samples are messy:

- Particles overlap

- Precipitates grow on other particles

- Agglomerates dominate statistics

Handling this requires:

- Robust geometrical separation

- Careful control of histogram dynamic range

- Sometimes, multiple imaging signals (SE + BSE, or BSE + CL)

- In difficult cases, machine-learning-based de-agglomeration

The takeaway is simple: segmentation strategy must match sample reality — not idealized assumptions.
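One simple way to see how much overlap is affecting a dataset is to screen segmented objects by shape before trusting the size statistics. The sketch below uses circularity, 4πA/P², which is 1 for a perfect circle and lower for elongated or merged outlines. It is only a screening heuristic, not a separation method, and it assumes roughly round primary particles. The 0.7 cutoff and the record fields are hypothetical and would need tuning for a real sample.

```python
import math


def circularity(area, perimeter):
    """4*pi*A / P**2: 1.0 for a circle, smaller for irregular or merged shapes."""
    return 4.0 * math.pi * area / perimeter ** 2


def flag_candidate_agglomerates(objects, min_circularity=0.7):
    """Return ids of objects whose outline is too irregular for a single round particle."""
    return [
        obj["id"]
        for obj in objects
        if circularity(obj["area"], obj["perimeter"]) < min_circularity
    ]


r = 1.0
objects = [
    # A single circular particle of radius r.
    {"id": "single", "area": math.pi * r ** 2, "perimeter": 2 * math.pi * r},
    # Two touching circles segmented as one object: twice the area and perimeter.
    {"id": "pair", "area": 2 * math.pi * r ** 2, "perimeter": 4 * math.pi * r},
]
flagged = flag_candidate_agglomerates(objects)
```

For the idealized pair of touching circles the circularity is 0.5, so it is flagged while the single particle is not. Perimeters measured on pixelated outlines are biased, and genuinely angular particles also score low, so flagged objects are candidates for review rather than confirmed agglomerates.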

Automation Must Survive Heterogeneous Samples

5

A recurring concern was what happens during long automated runs:

- Does the system adjust B/C between samples?

- Can it maintain stability within a sample?

- Can settings be changed mid-run?

These questions all point to the same operational reality: heterogeneous samples are the norm, not the exception.

Automation that assumes uniform contrast, particle density, or composition will eventually fail. Robust workflows acknowledge variability and actively manage it.

Holders, Templates and “Unexciting” Features

6

Several questions focused on what might seem like unexciting details:

- Custom holders

- Saving settings per sample

- Reporting formats

- Stop criteria

- Resume-after-pause functionality

Individually, these topics sound mundane. Collectively, they define whether a system works at scale.

High-volume particle analysis lives or dies on:

- How fast jobs can be set up

- How reliably they run unattended

- How easy it is to audit and report results

These are not edge cases — they are the core of industrial particle analysis.

Particle Analysis Is Increasingly About Data Integration

7

One of the more forward-looking questions involved correlating particle analysis with nanoindentation maps and other datasets.

This points to a clear trend:

- Particle analysis is no longer isolated

- Users want to align morphology, chemistry, and mechanical properties

- Spatial correlation across techniques is becoming more important

SEM-based particle analysis increasingly acts as a spatial reference framework for other measurements.
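A minimal sketch of what such a correlation could look like: assign each nanoindentation point to the particle it falls inside, so hardness values can be grouped per particle. It assumes both datasets have already been registered into the same coordinate system, which is often the hard part in practice, and it approximates each particle by a circle around its centroid. All field names and values are hypothetical.

```python
import math


def assign_indents_to_particles(particles, indents):
    """Map particle id -> list of hardness values for indents inside that particle.

    A particle is approximated by a circle (centroid x, y and equivalent radius).
    Indents outside every circle are treated as matrix and left unassigned.
    """
    result = {}
    for indent in indents:
        best_id, best_dist = None, math.inf
        for p in particles:
            dist = math.hypot(indent["x"] - p["x"], indent["y"] - p["y"])
            # Keep the closest particle whose circle contains the indent.
            if dist <= p["radius"] and dist < best_dist:
                best_id, best_dist = p["id"], dist
        if best_id is not None:
            result.setdefault(best_id, []).append(indent["hardness_gpa"])
    return result


# Hypothetical data, all coordinates in micrometers in a shared frame.
particles = [
    {"id": "P1", "x": 10.0, "y": 10.0, "radius": 3.0},
    {"id": "P2", "x": 20.0, "y": 10.0, "radius": 2.0},
]
indents = [
    {"x": 11.0, "y": 10.0, "hardness_gpa": 7.1},   # inside P1
    {"x": 9.0, "y": 11.0, "hardness_gpa": 6.9},    # inside P1
    {"x": 20.5, "y": 10.0, "hardness_gpa": 12.3},  # inside P2
    {"x": 15.0, "y": 10.0, "hardness_gpa": 3.0},   # between particles (matrix)
]
hardness_by_particle = assign_indents_to_particles(particles, indents)
```

A real workflow would use the segmented particle outline instead of an equivalent circle, but the principle is the same: the particle map provides the spatial index, and other measurements are attached to it.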

So, How Many Particles Are Enough?

8

How many images or particles are required for repeatable PSD?

The honest answer remains: it depends.

What matters is that:

- Statistics are monitored during acquisition

- Users can see when distributions stabilize

- Stop criteria are flexible (particles, frames, time)

Good particle analysis workflows help users know when to stop — not guess.
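As one possible way to express this, the sketch below tracks D50 after each acquired batch and stops either when the value has stabilized or when a hard limit on particles, frames, or time is reached. The 2% tolerance, the window of three consecutive updates, and the function names are illustrative assumptions. A real workflow might track D10 and D90 as well, since the tails of a distribution usually stabilize later than the median.

```python
def d50_is_stable(history, rel_tol=0.02, window=3):
    """True when each of the last `window` D50 updates changed by at most rel_tol."""
    if len(history) < window + 1:
        return False
    recent = history[-(window + 1):]
    return all(abs(b - a) <= rel_tol * abs(a) for a, b in zip(recent, recent[1:]))


def stop_reason(history, n_particles, n_frames, elapsed_s,
                max_particles=None, max_frames=None, max_seconds=None):
    """Return why acquisition should stop, or None to keep going."""
    if d50_is_stable(history):
        return "distribution stable"
    if max_particles is not None and n_particles >= max_particles:
        return "particle limit"
    if max_frames is not None and n_frames >= max_frames:
        return "frame limit"
    if max_seconds is not None and elapsed_s >= max_seconds:
        return "time limit"
    return None


# Hypothetical running D50 values (micrometers), one per acquired batch.
d50_history = [10.0, 12.0, 11.0, 11.1, 11.05, 11.08]

early = stop_reason(d50_history[:3], n_particles=600, n_frames=3, elapsed_s=90)
later = stop_reason(d50_history, n_particles=1200, n_frames=6, elapsed_s=180)
capped = stop_reason(d50_history[:3], n_particles=5000, n_frames=25,
                     elapsed_s=750, max_particles=5000)
```

With this data, the run continues after three batches, stops as stable after six, and the particle cap acts as a fallback if the distribution never settles.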